Monitor WSO2 Carbon logs with Logstash

The ELK stack is a popular stack for searching and analyzing data. Many people use it for analyzing logs. WSO2 also has a full-fledged Big Data Analytics Platform, which can analyze logs and do many more things.

Logstash is a great tool for managing events and logs. See Getting Started with Logstash if you haven't used logstash.

Following is the complete logstash config file. Save it as "logstash.conf"

Now it's time to run logstash!

We will first test whether the configuration file is okay.

Let's start logstash

Now start the WSO2 AS 5.2.1 server. You will now see log events from logstash.

For example:

I hope these instructions are clear. I will try to write a blog post on using Elasticsearch & Kibana later. :)

In this blog post, I'm explaining on how to monitor logs with Elasticsearch, Logstash and Kibana. I will mainly explain logstash configurations. I will not show how to set up Elasticsearch and Kibana. Those are very easy to setup and there are not much configurations. You can just figure it out very easily! :)

If you want to test an elasticsearch server, you can just extract the elasticsearch distribution and start an elasticsearch server. If you are using Kibana 3, you need to use a web server to host the Kibana application. With Kibana 4, you can use the standalone server provided in the distribution.

If you want to test an elasticsearch server, you can just extract the elasticsearch distribution and start an elasticsearch server. If you are using Kibana 3, you need to use a web server to host the Kibana application. With Kibana 4, you can use the standalone server provided in the distribution.

Configuring Logstash

Logstash is a great tool for managing events and logs. See Getting Started with Logstash if you haven't used logstash.

First of all, we need to configure logstash to get the wso2carbon.log file as an input. Then we need to use a filter to parse the log messages and extract data to analyze.

The wso2carbon.log file is written using log4j and the configurations are in $CARBON_HOME/repository/conf/log4j.properties.

For WSO2 log message parsing, we will be using the grok filter to extract the details configured via log4j pattern layout

For example, following is the pattern layout configured for wso2carbon.log in WSO2 AS 5.2.1 (wso2as-5.2.1/repository/conf/log4j.properties).

log4j.appender.CARBON_LOGFILE.layout.ConversionPattern=TID: [%T] [%S] [%d] %P%5p {%c} - %x %m {%c}%n

In this pattern, the class name ("{%c}") is logged twice. So, let's remove the extra class name. (I have created a JIRA to remove the extra class name from log4j configuration. See CARBON-15065)

Following should be the final configuration for wso2carbon.log.

Now when we start WSO2 AS 5.2.1, we can see all log messages have the pattern specified in log4j configuration.

For example:

Let's write a grok pattern to parse a log message (single line). Please look at grok filter docs for basic syntax in grok patterns. Once you are familiar with grok syntax, it's very easier to write patterns.

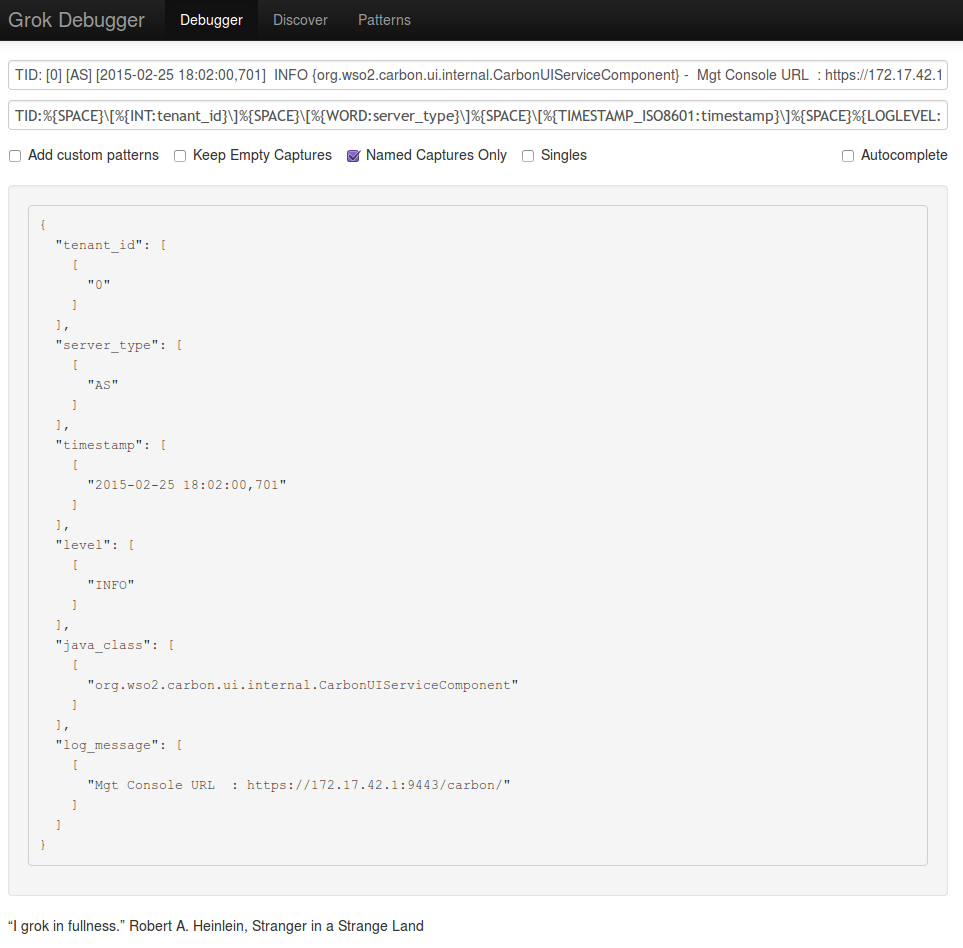

There is also an online "Grok Debugger" application to test grok patterns.

Following is the Grok pattern written for parsing above log lines.

You can test this Grok pattern with Grok Debugger. Use one of the lines in above log file for the input.

We are now parsing a single log line in logstash. Next, we need to look at how we can group exceptions or multiline log messages into one event.

For that we will use the multiline filter. As mentioned in the docs, we need to use a pattern to identify whether a particular log line is a part of the previous line. As configured in the log4j, all logs must start with "TID". If not we can assume that the particular log line belongs to the previous log line.

Finally we need to configure logstash to send output to some destination. We can use "stdout" output for testing. In a production setup, you can use elasticsearch servers.

log4j.appender.CARBON_LOGFILE.layout.ConversionPattern=TID: [%T] [%S] [%d] %P%5p {%c} - %x %m %n

Now when we start WSO2 AS 5.2.1, we can see all log messages have the pattern specified in log4j configuration.

For example:

isuru@isurup-ThinkPad-T530:~/test/wso2as-5.2.1/bin$ ./wso2server.sh start

isuru@isurup-ThinkPad-T530:~/test/wso2as-5.2.1/bin$ tail -4f ../repository/logs/wso2carbon.log

TID: [0] [AS] [2015-02-25 18:02:00,345] INFO {org.wso2.carbon.core.init.JMXServerManager} - JMX Service URL : service:jmx:rmi://localhost:11111/jndi/rmi://localhost:9999/jmxrmi

TID: [0] [AS] [2015-02-25 18:02:00,346] INFO {org.wso2.carbon.core.internal.StartupFinalizerServiceComponent} - Server : Application Server-5.2.1

TID: [0] [AS] [2015-02-25 18:02:00,347] INFO {org.wso2.carbon.core.internal.StartupFinalizerServiceComponent} - WSO2 Carbon started in 29 sec

TID: [0] [AS] [2015-02-25 18:02:00,701] INFO {org.wso2.carbon.ui.internal.CarbonUIServiceComponent} - Mgt Console URL : https://172.17.42.1:9443/carbon/

Let's write a grok pattern to parse a log message (single line). Please look at grok filter docs for basic syntax in grok patterns. Once you are familiar with grok syntax, it's very easier to write patterns.

There is also an online "Grok Debugger" application to test grok patterns.

Following is the Grok pattern written for parsing above log lines.

TID:%{SPACE}\[%{INT:tenant_id}\]%{SPACE}\[%{WORD:server_type}\]%{SPACE}\[%{TIMESTAMP_ISO8601:timestamp}\]%{SPACE}%{LOGLEVEL:level}%{SPACE}{%{JAVACLASS:java_class}}%{SPACE}-%{SPACE}%{GREEDYDATA:log_message}

You can test this Grok pattern with Grok Debugger. Use one of the lines in above log file for the input.

|

| Grok Debugger |

We are now parsing a single log line in logstash. Next, we need to look at how we can group exceptions or multiline log messages into one event.

For that we will use the multiline filter. As mentioned in the docs, we need to use a pattern to identify whether a particular log line is a part of the previous line. As configured in the log4j, all logs must start with "TID". If not we can assume that the particular log line belongs to the previous log line.

Finally we need to configure logstash to send output to some destination. We can use "stdout" output for testing. In a production setup, you can use elasticsearch servers.

Logstash Config File

Following is the complete logstash config file. Save it as "logstash.conf"

input {

file {

add_field => {

instance_name => 'wso2-worker'

}

type => "wso2"

path => [ '/home/isuru/test/wso2as-5.2.1/repository/logs/wso2carbon.log' ]

}

}

filter {

if [type] == "wso2" {

grok {

match => [ "message", "TID:%{SPACE}\[%{INT:tenant_id}\]%{SPACE}\[%{WORD:server_type}\]%{SPACE}\[%{TIMESTAMP_ISO8601:timestamp}\]%{SPACE}%{LOGLEVEL:level}%{SPACE}{%{JAVACLASS:java_class}}%{SPACE}-%{SPACE}%{GREEDYDATA:log_message}" ]

}

multiline {

pattern => "^TID"

negate => true

what => "previous"

}

}

}

output {

# elasticsearch { host => localhost }

stdout { codec => rubydebug }

}

Please note that I have used "add_field" in file input to show that you can add extra details to the log event.

Running Logstash

Now it's time to run logstash!

$ tar -xvf logstash-1.4.2.tar.gz $ cd logstash-1.4.2/bin

We will first test whether the configuration file is okay.

$ ./logstash --configtest -f ~/conf/logstash.conf

Using milestone 2 input plugin 'file'. This plugin should be stable, but if you see strange behavior, please let us know! For more information on plugin milestones, see http://logstash.net/docs/1.4.2/plugin-milestones {:level=>:warn}

Configuration OK

Let's start logstash

$ ./logstash -f ~/conf/logstash.conf

Now start the WSO2 AS 5.2.1 server. You will now see log events from logstash.

For example:

{

"message" => "TID: [0] [AS] [2015-02-26 00:31:41,389] INFO {org.wso2.carbon.core.internal.StartupFinalizerServiceComponent} - WSO2 Carbon started in 17 sec",

"@version" => "1",

"@timestamp" => "2015-02-25T19:01:49.151Z",

"type" => "wso2",

"instance_name" => "wso2-worker",

"host" => "isurup-ThinkPad-T530",

"path" => "/home/isuru/test/wso2as-5.2.1/repository/logs/wso2carbon.log",

"tenant_id" => "0",

"server_type" => "AS",

"timestamp" => "2015-02-26 00:31:41,389",

"level" => "INFO",

"java_class" => "org.wso2.carbon.core.internal.StartupFinalizerServiceComponent",

"log_message" => "WSO2 Carbon started in 17 sec"

}

Troubleshooting Tips

- You need to run the server as the file input will read only new lines. However if you want to test a log file from the beginning, you can use following input configuration.

input {

file {

add_field => {

instance_name => 'wso2-worker'

}

type => "wso2"

start_position => "beginning"

sincedb_path => "/dev/null"

path => [ '/home/isuru/test/wso2as-5.2.1/repository/logs/wso2carbon.log' ]

}

}

- When you are using multiline filter, the last line of the log file may not be processed. This is an issue in logstash 1.4.2 and I didn't notice the same issue in logstash-1.5.0.beta1.

I hope these instructions are clear. I will try to write a blog post on using Elasticsearch & Kibana later. :)

Comments